User input is the ability of computer programs to process gestures that humans perform with various physical devices. The most widely used such devices are the mouse, the keyboard and the touch screen. Note that the physical device with which a user performed a gesture is practically irrelevant to how the program actually processes that gesture. For example, an OS may convert touch input to mouse events, mouse and touch events to drag-and-drop events, or touch events to keyboard events. That is why in NOV the terms mouse, keyboard, touch and drag-and-drop should be understood as virtual devices.

For NOV to integrate functionally with a specific presentation layer, that presentation layer needs to support the mouse, keyboard, touch and drag-and-drop virtual devices. This does not mean that it actually needs to have a mouse, a keyboard or a built-in ability for drag-and-drop. Silverlight, for example, has no built-in drag-and-drop, but because the NOV host for Silverlight emulates Microsoft Windows drag-and-drop, to NOV it appears that Silverlight does have built-in drag-and-drop.

NOV receives UI events from the virtual devices through callback methods of the NKeyboard, NMouse and NDragDrop static classes. These events are originally captured by the window peers. Instead of directly notifying the window to which the peer belongs, each event is always first routed to the respective event dispatcher (NKeyboard, NMouse or NDragDrop), together with the window whose peer originally received it.

By the time UI events are sent to the NOV dispatchers for processing, they are already normalized. This means that the information associated with a specific event has already been converted to exactly the same NOV format, regardless of the integration platform. For example, mouse coordinates in WinForms arrive in pixels but are automatically converted to DIPs (device-independent pixels). Similarly, the keyboard keys in WinForms, WPF and Silverlight are represented by completely different enums, but by the time they arrive in NOV they are all packed as NKey instances. That is why the same NOV code can respond to UI events arriving from different presentation layers.
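The coordinate part of this normalization can be sketched as follows. This is a minimal Python illustration, not NOV code: NormalizedMouseEvent and the normalize_* helpers are hypothetical names. The only fact it relies on is that one DIP is 1/96 of an inch, so a length in pixels converts to DIPs as pixels * 96 / dpi.

```python
from dataclasses import dataclass

# One device-independent pixel (DIP) is defined as 1/96 of an inch.
DIPS_PER_INCH = 96.0


@dataclass(frozen=True)
class NormalizedMouseEvent:
    """Hypothetical platform-neutral mouse event; not the actual NOV type."""
    x: float  # horizontal position in DIPs
    y: float  # vertical position in DIPs


def pixels_to_dips(pixels: float, dpi: float) -> float:
    """Convert a length in physical pixels to DIPs on a display with the given DPI."""
    return pixels * DIPS_PER_INCH / dpi


def normalize_winforms_mouse(x_px: float, y_px: float, dpi: float) -> NormalizedMouseEvent:
    # WinForms reports mouse coordinates in pixels, so they must be converted.
    return NormalizedMouseEvent(pixels_to_dips(x_px, dpi), pixels_to_dips(y_px, dpi))


def normalize_wpf_mouse(x_dip: float, y_dip: float) -> NormalizedMouseEvent:
    # WPF already reports positions in DIPs, so they pass through unchanged.
    return NormalizedMouseEvent(x_dip, y_dip)


# On a 144 DPI display, a WinForms position of (150, 300) pixels and a WPF
# position of (100, 200) DIPs normalize to the same event.
winforms_event = normalize_winforms_mouse(150, 300, 144.0)
wpf_event = normalize_wpf_mouse(100.0, 200.0)
```

Once both events are in the same format, any handler written against NormalizedMouseEvent works unchanged for either platform.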

Whenever a UI event from a window peer arrives at a dispatcher, it goes through a similar procedure, which consists of these steps:

- Ensure the integrity of user input.

- Update the respective target for user input if needed.

- Raise action event(s) if needed.

In NOV, a target for user input is any element that derives from the NInputElement class (see Input Elements for more information).
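The three dispatching steps can be sketched for a mouse move. This is a hypothetical Python illustration rather than the NOV implementation: Window, InputElement, MouseDispatcherSketch and the hit-test callback are invented names, and treating "ensure the integrity of user input" as dropping events from closed windows is only an assumption about what that step might involve. As described earlier, the dispatcher receives the window together with the event.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Window:
    """Hypothetical window record; 'closed' stands in for real integrity checks."""
    closed: bool = False


@dataclass(eq=False)
class InputElement:
    """Hypothetical stand-in for an NInputElement-derived node."""
    name: str


# A hit test maps a window and a position to the element under it (or None).
HitTest = Callable[[Window, float, float], Optional[InputElement]]


class MouseDispatcherSketch:
    """Hypothetical dispatcher illustrating the three steps for a mouse move."""

    def __init__(self, hit_test: HitTest) -> None:
        self.hit_test = hit_test
        self.over_target: Optional[InputElement] = None
        self.log: List[Tuple[Optional[str], ...]] = []

    def on_mouse_move(self, window: Window, x: float, y: float) -> None:
        # Step 1: ensure the integrity of user input
        # (assumed here: ignore events from a window that is already closed).
        if window.closed:
            return
        # Step 2: update the mouse over target if the element under the pointer changed.
        new_target = self.hit_test(window, x, y)
        if new_target is not self.over_target:
            old_name = self.over_target.name if self.over_target else None
            new_name = new_target.name if new_target else None
            self.log.append(("OverTargetChanged", old_name, new_name))
            self.over_target = new_target
        # Step 3: raise the action event on the current target, if any.
        if self.over_target is not None:
            self.log.append(("MouseMove", self.over_target.name))
```

In this sketch a single log entry stands in for the target change; a real dispatcher would perform the full Leave/Out and In/Enter sequence described in the rest of this section.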

To the DOM, the UI event dispatching process appears as a set of events and extended property changes. All events that this process raises are in the NEvent.UserInputCategoryEvent category and as such can sink and bubble (see Events for more information about event categories and event routing).
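Sink-and-bubble routing can be sketched as follows, assuming that sinking visits the route from its top node down to the target and bubbling visits it from the target back up. Node, route_to_root and raise_routed_event are hypothetical names, and the sketch only records the visiting order instead of invoking real handlers.

```python
from typing import List, Optional, Tuple


class Node:
    """Hypothetical DOM node with a parent link; not the actual NOV class."""

    def __init__(self, name: str, parent: Optional["Node"] = None) -> None:
        self.name = name
        self.parent = parent


def route_to_root(target: Node) -> List[Node]:
    """Return the nodes from the target up to the root, target first."""
    route: List[Node] = []
    node: Optional[Node] = target
    while node is not None:
        route.append(node)
        node = node.parent
    return route


def raise_routed_event(route: List[Node], event: str) -> List[Tuple[str, str, str]]:
    """Record the sink phase (top of the route down to the target),
    followed by the bubble phase (target back up to the top)."""
    visits = [(event, "sink", node.name) for node in reversed(route)]
    visits += [(event, "bubble", node.name) for node in route]
    return visits
```

Passing a list shorter than the full route_to_root result models events whose route stops below the root.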

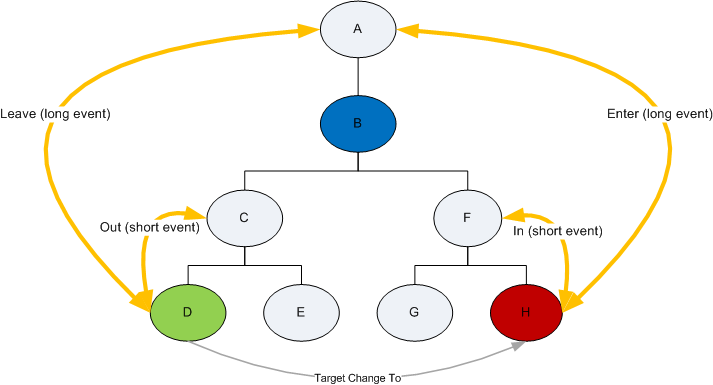

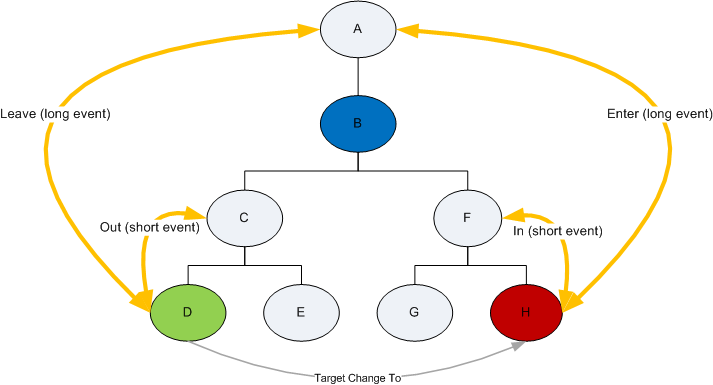

When a UI event dispatcher changes an input target (e.g. NMouse changes the Mouse Over Target or NKeyboard changes the Focus Target), it follows a pattern that consists of four events and two properties. For example, suppose that a UI event dispatcher needs to change an input target as illustrated in the diagram.

The input target changes from node D to node H. As part of this change, the dispatcher first raises the respective Leave event, which sinks and bubbles along the entire route from node D to the root (node A). It then raises the respective Out event, which sinks and bubbles only up to the last common ancestor of the D-to-root and H-to-root chains. In our case this ancestor is node B, which is itself excluded, so the Out event route consists of nodes D and C.
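The Leave and Out routes can be computed from parent links alone. The following Python sketch uses hypothetical names (Node, path_to_root, last_common_ancestor, out_route) and rebuilds the tree implied by the routes in the text: A is the root, B is its child, C and F are children of B, D is a child of C and H is a child of F. The Out route it computes for the D-to-H change is D, C, which matches the NKeyboard.FocusOutEvent route in the focus example later in this section.

```python
from typing import List, Optional


class Node:
    """Hypothetical DOM node with a parent link; not the actual NOV class."""

    def __init__(self, name: str, parent: Optional["Node"] = None) -> None:
        self.name = name
        self.parent = parent


def path_to_root(node: Node) -> List[Node]:
    """Nodes from 'node' up to the root, 'node' first (this is the Leave route)."""
    path: List[Node] = []
    current: Optional[Node] = node
    while current is not None:
        path.append(current)
        current = current.parent
    return path


def last_common_ancestor(a: Node, b: Node) -> Optional[Node]:
    """First node on a's root chain that is also on b's root chain."""
    b_chain = set(path_to_root(b))
    for candidate in path_to_root(a):
        if candidate in b_chain:
            return candidate
    return None


def out_route(old: Node, new: Optional[Node]) -> List[Node]:
    """Nodes from 'old' up to, but excluding, the last common ancestor with 'new'."""
    stop = last_common_ancestor(old, new) if new is not None else None
    route: List[Node] = []
    for node in path_to_root(old):
        if node is stop:
            break
        route.append(node)
    return route


# The tree implied by the text: A -> B -> {C -> D, F -> H}.
A = Node("A")
B = Node("B", A)
C = Node("C", B)
D = Node("D", C)
F = Node("F", B)
H = Node("H", F)

leave = [n.name for n in path_to_root(D)]  # Leave route: D, C, B, A
out = [n.name for n in out_route(D, H)]    # Out route: D, C
```

When there is no new target, this sketch makes the Out route the whole chain to the root; that choice is an assumption, since the text does not cover that case.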

After the Leave/Out event pair is raised, the dispatcher updates the pair of Direct and Within extended properties. As part of this change, the Direct property is cleared from node D and set to true for node H. The Within property is cleared from nodes D and C and set to true for nodes H and F. Nodes B and A already had the Within property set to true when D became the current target, so they do not need to be updated (a Within value of true on a node signals that the respective input target is within that node's branch).
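Which nodes need their Within property updated follows from the two root chains: only nodes that lie on one chain but not on the other change. A minimal sketch with hypothetical names (Node, within_changes, apply_within), modelling the Within property as the set of nodes for which it is true; the Direct property simply moves from the old target to the new one and is not modelled here.

```python
from typing import List, Optional, Set, Tuple


class Node:
    """Hypothetical DOM node with a parent link; not the actual NOV class."""

    def __init__(self, name: str, parent: Optional["Node"] = None) -> None:
        self.name = name
        self.parent = parent


def path_to_root(node: Optional[Node]) -> List[Node]:
    """Nodes from 'node' up to the root; empty when there is no target."""
    path: List[Node] = []
    current = node
    while current is not None:
        path.append(current)
        current = current.parent
    return path


def within_changes(old: Optional[Node], new: Optional[Node]) -> Tuple[List[Node], List[Node]]:
    """Return (nodes to clear, nodes to set) for the Within property."""
    old_chain, new_chain = path_to_root(old), path_to_root(new)
    old_set, new_set = set(old_chain), set(new_chain)
    to_clear = [n for n in old_chain if n not in new_set]  # only on the old chain
    to_set = [n for n in new_chain if n not in old_set]    # only on the new chain
    return to_clear, to_set


def apply_within(within: Set[Node], old: Optional[Node], new: Optional[Node]) -> None:
    """Update the set of nodes whose Within property is true."""
    to_clear, to_set = within_changes(old, new)
    within.difference_update(to_clear)
    within.update(to_set)


A = Node("A")
B = Node("B", A)
C = Node("C", B)
D = Node("D", C)
F = Node("F", B)
H = Node("H", F)

within = set(path_to_root(D))  # while D is the target, Within is true on D, C, B, A
to_clear, to_set = within_changes(D, H)
apply_within(within, D, H)
```

Nodes B and A appear on both chains, so they are never touched, which is exactly why the dispatcher does not need to update them.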

As with the Leave/Out event pair, the dispatcher finally raises the In/Enter event pair. These events have the same routing strategies (the In event is routed from node H to node F and the Enter event from node H to the root), but are raised in reverse order, i.e. first the In event and then the Enter event.

For example: suppose that the focus target changes from node D to node H. The NKeyboard will perform the following actions:

- Raise the NKeyboard.LostFocusEvent event for node D (routed from D to A).

- Raise the NKeyboard.FocusOutEvent event for node D (routed from D to C).

- Clear NKeyboard.FocusWithinPropertyEx from nodes D and C.

- Clear NKeyboard.FocusedPropertyEx from node D.

- Set NKeyboard.FocusedPropertyEx to true for node H.

- Set NKeyboard.FocusWithinPropertyEx to true for nodes H and F.

- Raise the NKeyboard.FocusInEvent event for node H (routed from H to F).

- Raise the NKeyboard.GotFocusEvent event for node H (routed from H to A).
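The whole sequence can be generated by a small function. This is a hypothetical Python sketch, not the NKeyboard implementation: Node, change_target and FOCUS_NAMES are invented, the event and property names from the list above are used only as labels, and both the old and the new target are assumed to exist. Running it for the D-to-H change yields the eight steps listed above.

```python
from typing import Dict, List, Optional, Tuple


class Node:
    """Hypothetical DOM node with a parent link; not the actual NOV class."""

    def __init__(self, name: str, parent: Optional["Node"] = None) -> None:
        self.name = name
        self.parent = parent


def path_to_root(node: Node) -> List[Node]:
    path: List[Node] = []
    current: Optional[Node] = node
    while current is not None:
        path.append(current)
        current = current.parent
    return path


def below(chain: List[Node], stop: Optional[Node]) -> List[Node]:
    """Prefix of 'chain' ending just before 'stop' (the whole chain if stop is None)."""
    result: List[Node] = []
    for node in chain:
        if node is stop:
            break
        result.append(node)
    return result


def names_of(nodes: List[Node]) -> List[str]:
    return [n.name for n in nodes]


def change_target(old: Node, new: Node, names: Dict[str, str]) -> List[Tuple[str, str, List[str]]]:
    """Leave/Out, then Direct/Within updates, then In/Enter, as one step list."""
    old_chain, new_chain = path_to_root(old), path_to_root(new)
    new_set = set(new_chain)
    common = next((n for n in old_chain if n in new_set), None)  # last common ancestor
    out_nodes, in_nodes = below(old_chain, common), below(new_chain, common)
    return [
        ("raise", names["leave"], names_of(old_chain)),
        ("raise", names["out"], names_of(out_nodes)),
        ("clear", names["within"], names_of(out_nodes)),
        ("clear", names["direct"], [old.name]),
        ("set", names["direct"], [new.name]),
        ("set", names["within"], names_of(in_nodes)),
        ("raise", names["in"], names_of(in_nodes)),
        ("raise", names["enter"], names_of(new_chain)),
    ]


FOCUS_NAMES = {
    "leave": "NKeyboard.LostFocusEvent",
    "out": "NKeyboard.FocusOutEvent",
    "within": "NKeyboard.FocusWithinPropertyEx",
    "direct": "NKeyboard.FocusedPropertyEx",
    "in": "NKeyboard.FocusInEvent",
    "enter": "NKeyboard.GotFocusEvent",
}

A = Node("A")
B = Node("B", A)
C = Node("C", B)
D = Node("D", C)
F = Node("F", B)
H = Node("H", F)

steps = change_target(D, H, FOCUS_NAMES)
```

Because only the name table is focus-specific, the same function would describe a Mouse Over Target change if given the corresponding NMouse names.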

The Leave/Out and In/Enter events are considered to be Target Change UI Events, since they are not directly associated with a real action that the user performs with a device - they are just notifications that the respective dispatcher is changing the current target for Action UI Events. Some input target changes are implicitly triggered by Action UI Events - for example the Mouse Over Target change, which may be performed when the mouse is moved inside a window peer. Other input target changes can be explicitly triggered - for example the Keyboard Focus Target change is performed when you focus the keyboard on a specific input target.

NOV objects that deal with user input events typically respect the Cancel flag (which has the meaning of "handled" in the context of user input) only for Action UI Events. It makes little sense to mark events like Mouse Enter and Mouse In as "handled", since they are just notifications that the mouse over target is entering the node. Furthermore, "handling" pairs of events is ambiguous in general, especially when these events can be dispatched implicitly as part of Action UI Events (Mouse Move, for example). That is why you can expect NOV to raise the Cancel flag only for Action UI Events.

When an Action UI Event can be processed by multiple targets, it is essential to mark it as "handled" by raising its Cancel property, so that subsequent handlers do not perform any other actions. This helps you enforce the One User Action = One DOM Reaction pattern.

For example, suppose that button A contains button B, and that both buttons are clicked when pressed. If NOV did not cancel (i.e. mark as handled) the MouseDown event, a MouseDown in button B would click both button B and button A. Because the NOV button handles MouseDown, the event arrives at button A already handled, button A's implementation does nothing, and only button B is clicked.
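The effect of the Cancel flag in this scenario can be sketched as follows. Button, MouseDownArgs and bubble_mouse_down are hypothetical names; the cancel field stands in for NOV's Cancel property, and the MouseDown event is assumed to bubble from button B to button A.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MouseDownArgs:
    """Hypothetical event arguments; 'cancel' plays the role of NOV's Cancel flag."""
    cancel: bool = False


class Button:
    """Hypothetical button that clicks when it receives an unhandled MouseDown."""

    def __init__(self, name: str, parent: Optional["Button"] = None,
                 marks_handled: bool = True) -> None:
        self.name = name
        self.parent = parent
        self.marks_handled = marks_handled
        self.clicked = False

    def on_mouse_down(self, args: MouseDownArgs) -> None:
        if args.cancel:
            return  # already handled by a nested target: do nothing
        self.clicked = True
        if self.marks_handled:
            args.cancel = True  # mark as handled so outer targets ignore it


def bubble_mouse_down(target: Button) -> None:
    """Deliver one MouseDown to the target and then to each of its ancestors."""
    args = MouseDownArgs()
    node: Optional[Button] = target
    while node is not None:
        node.on_mouse_down(args)
        node = node.parent


button_a = Button("A")
button_b = Button("B", parent=button_a)
bubble_mouse_down(button_b)  # only button B is clicked
```

Constructing both buttons with marks_handled=False reproduces the problematic case in which a single MouseDown clicks both buttons.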